Append to a Static List in a YAML CloudFormation Template

When writing CloudFormation stack templates, I sometimes need to create a list combining things defined at runtime and static values.

Imagine you have a template that contains a mapping that enumerates IAM roles by environment. You want to grant permissions to these roles as well as to one or more Lambda execution roles. Can you create a list composed of the static values defined in your map and references to roles created as part of your stack?

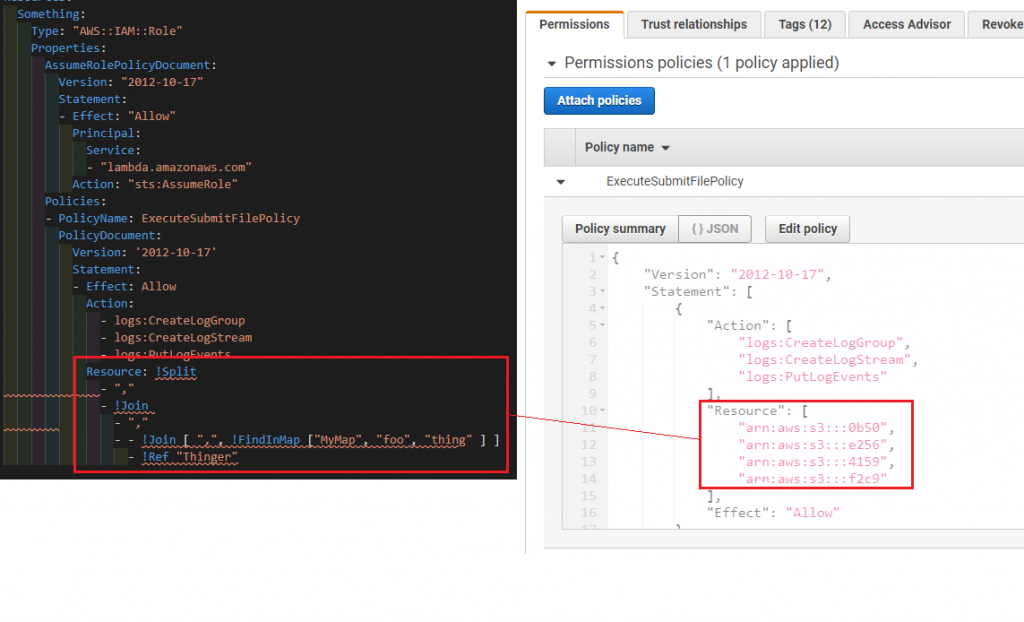

The FindInMap intrinsic function returns a list when the mapped value is a list, as in our example. The Join function creates a string from the elements of a list, separated by a given delimiter.
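To make that concrete, here is a minimal sketch of a template that only joins one mapped list and exposes the result as an output. The mapping values are copied from the full template later in this post; the `Placeholder` resource and the `JoinedThing` output name are hypothetical and exist only so the sketch forms a complete template.

```yaml
# Minimal sketch; "Placeholder" and "JoinedThing" are hypothetical names.
Description: FindInMap and Join sketch
Mappings:
  MyMap:
    foo:
      thing:
        - "arn:aws:s3:::0b50"
        - "arn:aws:s3:::e256"
        - "arn:aws:s3:::4159"
Resources:
  # A template needs at least one resource; this no-op handle is only a stand-in.
  Placeholder:
    Type: "AWS::CloudFormation::WaitConditionHandle"
Outputs:
  JoinedThing:
    # FindInMap yields the three-element list above; Join collapses it to
    # "arn:aws:s3:::0b50,arn:aws:s3:::e256,arn:aws:s3:::4159"
    Value: !Join [",", !FindInMap ["MyMap", "foo", "thing"]]
```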

You can apply Join to the list returned by FindInMap, which yields a single comma-delimited string. You can then join that string with a second list of values, again using a comma as the delimiter. The second list can include references to parameters or resources defined in the template.

!Join

- ","

- - !Join [",", !FindInMap ["MyMap", "foo", "thing"]]

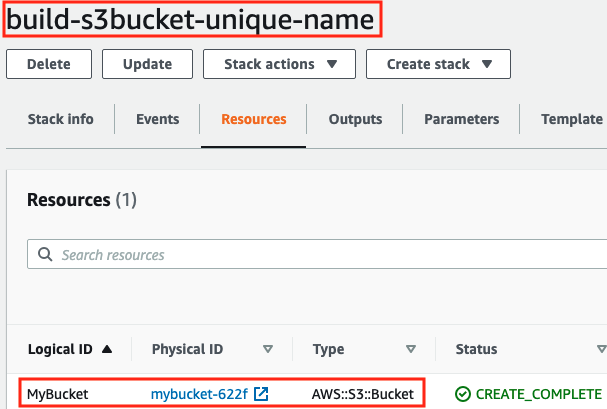

- !Ref "Thinger"The following shows a CloudFormation stack template using this technique juxtaposition to an instance of the provisioned resource..

Notice that the provisioned resource contains every element of both lists. The following is the complete template:

    Description: Sample Stack
    Parameters:
      Thinger:
        Type: "String"
        Default: "arn:aws:s3:::f2c9"
    Mappings:
      MyMap:
        foo:
          thing:
            - "arn:aws:s3:::0b50"
            - "arn:aws:s3:::e256"
            - "arn:aws:s3:::4159"
          thang:
            - "arn:aws:s3:::8199"
            - "arn:aws:s3:::d9f1"
            - "arn:aws:s3:::bc2b"
        bar:
          thing:
            - "arn:aws:s3:::bd69"
            - "arn:aws:s3:::eb00"
            - "arn:aws:s3:::0f55"
          thang:
            - "arn:aws:s3:::5ebc"
            - "arn:aws:s3:::4ccb"
            - "arn:aws:s3:::85c2"
    Resources:
      Something:
        Type: "AWS::IAM::Role"
        Properties:
          AssumeRolePolicyDocument:
            Version: "2012-10-17"
            Statement:
              - Effect: "Allow"
                Principal:
                  Service:
                    - "lambda.amazonaws.com"
                Action: "sts:AssumeRole"
          Policies:
            - PolicyName: ExecuteSubmitFilePolicy
              PolicyDocument:
                Version: "2012-10-17"
                Statement:
                  - Effect: Allow
                    Action:
                      - logs:CreateLogGroup
                      - logs:CreateLogStream
                      - logs:PutLogEvents
                    Resource: !Split
                      - ","
                      - !Join
                        - ","
                        - - !Join [",", !FindInMap ["MyMap", "foo", "thing"]]
                          - !Ref "Thinger"
    Outputs:
      UnifiedList:
        Value: !Join
          - ","
          - - !Join [",", !FindInMap ["MyMap", "foo", "thing"]]
            - !Ref "Thinger"
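One detail in the template is easy to miss: the `Resource` property wraps the joined string in `!Split`, presumably because a policy statement needs separate ARNs rather than one comma-delimited string, while the `UnifiedList` output keeps the string form. The fragment below is a sketch of how that expression should evaluate, step by step, assuming the default value of the `Thinger` parameter. It is meant to sit inside a policy statement and is not a complete template.

```yaml
# Fragment of a policy statement; the comments trace the expected evaluation
# when Thinger keeps its default value of "arn:aws:s3:::f2c9".
Resource: !Split
  - ","
  # Step 3: splitting on "," turns the string from step 2 into a four-element list.
  - !Join
    - ","
    # Step 2: the outer Join combines the step 1 string and Thinger into
    #   "arn:aws:s3:::0b50,arn:aws:s3:::e256,arn:aws:s3:::4159,arn:aws:s3:::f2c9"
    - # Step 1: the inner Join flattens the mapped list into
      #   "arn:aws:s3:::0b50,arn:aws:s3:::e256,arn:aws:s3:::4159"
      - !Join [",", !FindInMap ["MyMap", "foo", "thing"]]
      - !Ref "Thinger"
```

The round trip only works if no value contains the delimiter. ARNs like the ones here contain no commas, so "," is safe in this example; for other data, pick a delimiter that cannot appear in any element.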

How often you will need this technique is debatable. That said, it’s a handy pattern for combining two lists in a CloudFormation stack template.